

Mark Frazer : May 8, 2018 1:09:00 PM


Since the publication of the results from the most recent Programme for International Student Assessment (PISA) by the Organisation for Economic Co-operation and Development (OECD), many researchers and educational bloggers have drawn attention to, and have questioned, the results. For example, Greg Ashman has discussed the implications of the 2015 study on several occasions.

The 2015 PISA study assessed the performance of over half a million 15-year-olds in 72 countries using a series of computer-based tasks and questionnaires. The 2015 assessment focused on science, mathematics, reading, collaborative problem solving and financial literacy. Since the publication of the study’s data in December 2016, it has been possible for anyone to examine the findings.

My area of interest is the teaching of science and, in particular, the apparent value of students engaging in meaningful scientific discourse and reasoning with each other.

One item from the questionnaire which caught my attention was: ‘When learning science, students are asked to argue about science questions’. Participants were required to respond by indicating whether this happened in all lessons, in most lessons, in some lessons, or never or hardly ever.

The data were not what I expected.

There is a widely held view that encouraging students to engage in independent and collaborative work with others is a positive approach, which will improve educational outcomes. Furthermore, it seems to be accepted by many that traditional and strongly didactic teaching styles do not have such an impact. Inquiry-led learning has been popularised and now sits at the heart of many science curricula.

During the past two decades a number of researchers have placed the development of skills related to scientific practice at the centre of science education. For example, Driver, Osborne and Newton (2000), Duschl and Osborne (2002), Kind (2013) and Macpherson (2016) all support the importance of argumentation, scientific reasoning and critique by students when learning about science.

It is thought that by engaging in these practices students will learn to communicate scientific knowledge more effectively, improve their procedural understanding and develop as authentic scientists.

However, it seems that the findings from large-scale studies, such as PISA, do not unequivocally support this view. Indeed, they appear to contradict currently accepted theories relating to teaching and learning.

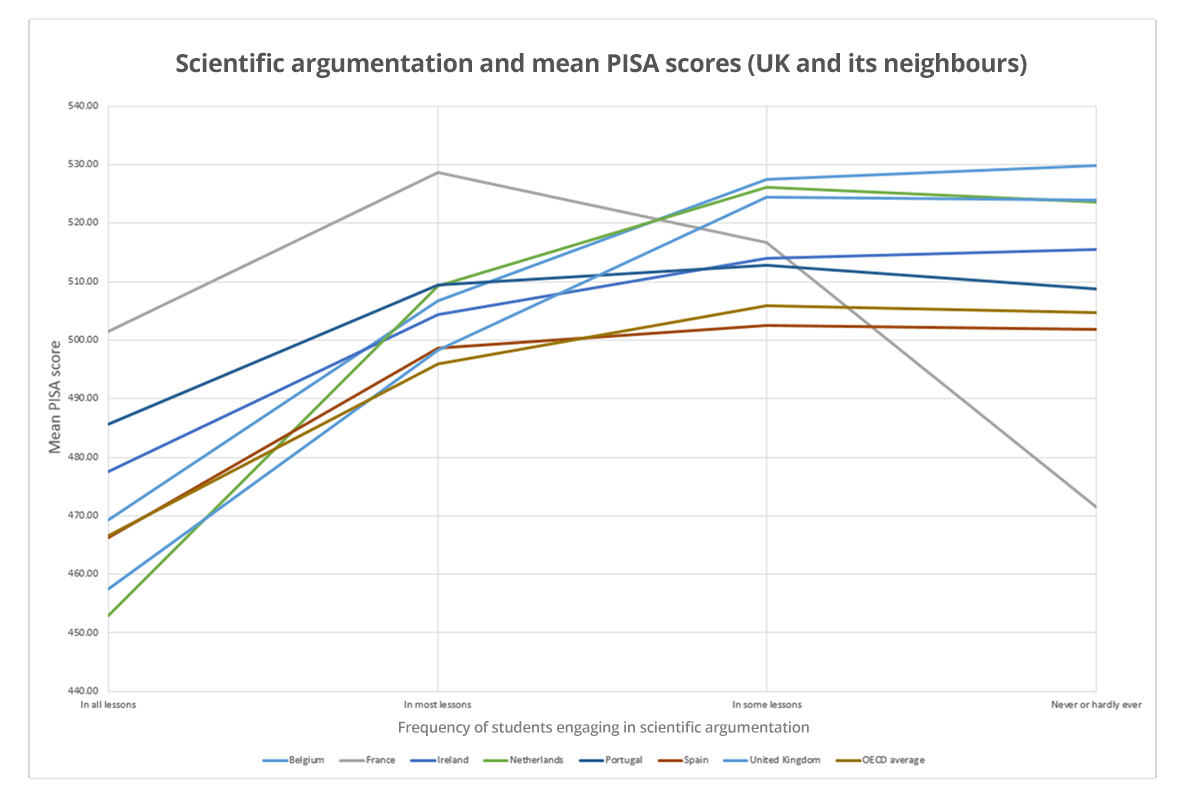

The graph below shows a puzzling relationship between how often students are involved in scientific argumentation and their overall score in the PISA assessment. The data appear to suggest that scores decrease as engagement in scientific argumentation becomes more frequent. The graph shows only a selection of participating nations (the UK and some of its closest neighbours), but the picture across the world seems to be quite similar. (Apologies to maths specialists, who probably will not like my use of line graphs in this situation, but I think they help to illustrate the point quite well.)

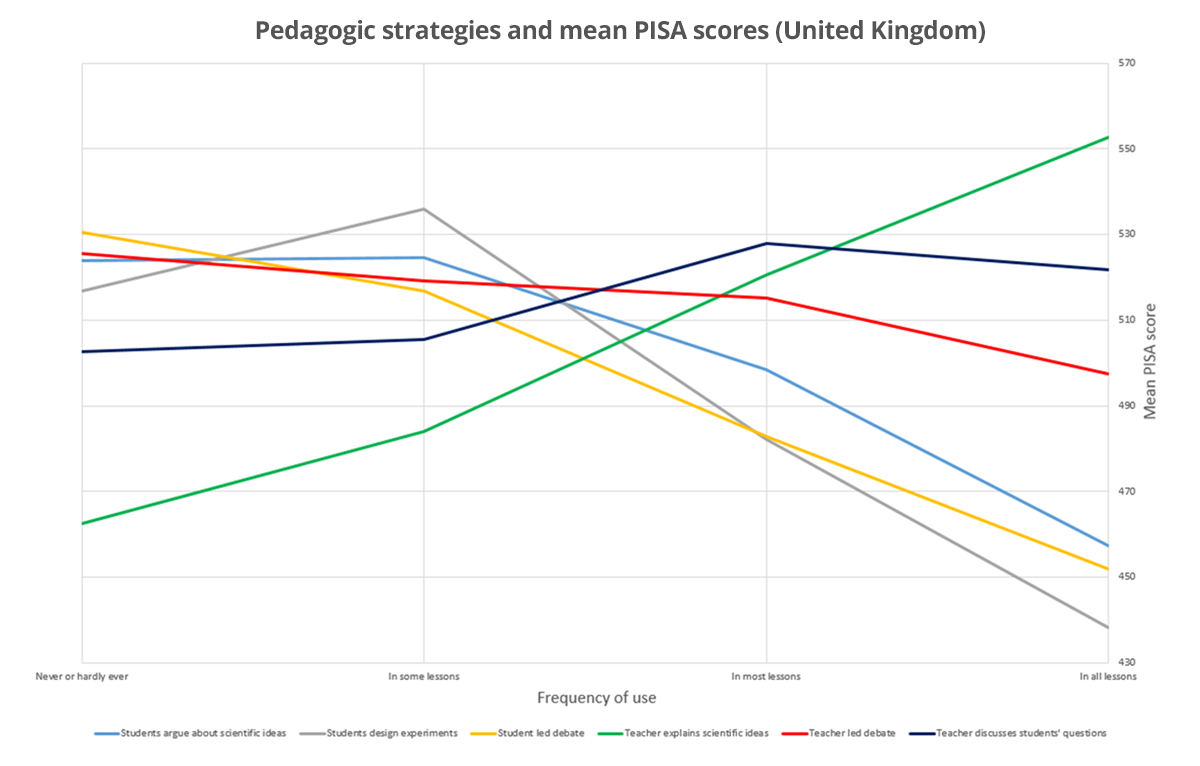
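For readers who would like to explore the public data themselves, a comparison of this kind comes down to grouping students by their questionnaire response and averaging their scores within each group. The following is only a minimal sketch of that idea, not the method used to produce the graphs in this post. The `(response, score, weight)` record layout and the function name `mean_score_by_response` are my own assumptions rather than the PISA file format, the example values are made up purely to show the arithmetic, and a proper PISA analysis would also need to handle plausible values and replicate weights.

```python
from collections import defaultdict

# Response categories from the questionnaire item, most to least frequent.
CATEGORIES = [
    "in all lessons",
    "in most lessons",
    "in some lessons",
    "never or hardly ever",
]


def mean_score_by_response(records):
    """Return the weighted mean score for each response category.

    `records` is an iterable of (response, score, weight) tuples.
    This layout is hypothetical and is not the PISA file format.
    """
    totals = defaultdict(float)
    weights = defaultdict(float)
    for response, score, weight in records:
        totals[response] += score * weight
        weights[response] += weight
    # Categories with no (weighted) respondents are left out of the result.
    return {c: totals[c] / weights[c] for c in CATEGORIES if weights[c] > 0}


# Toy records to illustrate the calculation only. These are NOT PISA results.
example = [
    ("in all lessons", 10.0, 1.0),
    ("in all lessons", 20.0, 3.0),
    ("never or hardly ever", 30.0, 2.0),
]

print(mean_score_by_response(example))
```

Weighting matters because each sampled student stands in for a different number of students in the population, so a simple unweighted average could give a misleading picture.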

In addition, if we look at the reported frequency of the participating UK students’ involvement in a range of pedagogical strategies and their overall PISA scores, we again see some surprising results.

The data appear to suggest that independent or student-led activities do not support outcomes as effectively as more traditional teacher-led activities, which seem to have a more positive impact in terms of overall PISA scores. The green line indicates the impact of teachers clearly explaining scientific ideas to their students. The more this happens, the better the outcome for students.

Amongst these possibly counter-intuitive messages, the graphs do appear to suggest that there is likely to be an optimum combination of teaching and learning strategies. A 2017 report by McKinsey and Company describes this ‘sweet spot’, or blend of teaching styles, which is likely to produce the best outcomes in PISA.

What, then, should we remember when looking at these sometimes surprising findings?

Effective teachers are skilled in drawing together different strands of evidence to form an overall impression of how well their students are performing. This is the art of assessment and the most effective practitioners will act upon this collated information, using it to inform their teaching to best support their students, helping them to make further progress.

In the next post, we will explore the evidence behind a blended-strategies model which teachers may wish to consider.

Read 6 Elements of Great Teaching

Download Professor Rob Coe’s presentation How can teachers learn to be better teachers?
