Systematic reviews and meta-analyses – what’s all the fuss?

Should we be using systematic reviews and meta-analyses as teachers and school leaders to support the decisions we make, or is that a luxury? How do...

Professor Steve Higgins: Jun 11, 2019 1:40:00 PM

Is meta-analysis in its current state trustworthy enough to be a basis for practitioner decisions?

It all depends on what kind of decisions. Evidence should inform educational decision-making, but should not replace professional judgement. It provides an indication of the pattern of effects which has occurred at other times, in other places and with different teachers and pupils. It can’t determine what should be done in a specific instance, but nor should it be ignored.

I think of it as indicating good bets. Where an approach has cumulative evidence of success, it is likely to be a good bet. The larger the typical effect, the more likely it is to be beneficial across a range of contexts.
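The "good bet" idea can be made concrete with a minimal sketch. It rests on an assumption borrowed from random-effects meta-analysis: that the true effect of an approach varies across contexts as a normal distribution with a typical (mean) effect `mu` and a between-context standard deviation `tau`. Under that assumption, the probability that the effect in a new context is positive is Φ(mu/tau). The function names and the values of `mu` and `tau` are hypothetical, chosen only for illustration; they are not drawn from the Toolkit.

```python
from math import erf, sqrt


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function, Phi(x)."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def prob_positive_effect(mu: float, tau: float) -> float:
    """Probability that the true effect in a new context is above zero,
    ASSUMING true effects across contexts ~ Normal(mu, tau**2).
    mu and tau are hypothetical inputs, not values from any real review."""
    if tau <= 0:
        # No variation between contexts: every context has effect mu.
        return 1.0 if mu > 0 else 0.0
    return normal_cdf(mu / tau)


# Same spread across contexts, different typical effects.
for mu in (0.1, 0.3, 0.5):
    p = prob_positive_effect(mu, tau=0.25)
    print(f"typical effect {mu:.1f}: chance of benefit in a new context = {p:.2f}")
```

With the spread held at 0.25, raising the typical effect from 0.1 to 0.5 raises the chance of benefit in a new context from about 0.66 to about 0.98, which is the sense in which a larger typical effect is a safer bet.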

It is also important to understand that both the quality and quantity of evidence vary across different areas of research. We try to indicate this with the padlock symbols in the EEF's Teaching and Learning Toolkit.

In some areas there is extensive research, such as phonics as a catch-up approach in reading, or the value of metacognitive approaches in developing knowledge and understanding. In other areas, such as how best to develop parental engagement so that it improves children's educational outcomes, or the value of performance pay for improving pupils' outcomes, the evidence is less robust.

It is also important to remember that evidence can help us decide not to do some things.

Approaches such as repeating a year or holding pupils back if they don’t make the grade are not generally a good idea. This is useful to know when making a decision about a particular pupil in a particular instance or when setting a policy at local or national level.

There are lots of alternatives to meta-analysis. There are other forms of systematic review, for example, which do not include quantitative information, as well as other approaches to synthesising findings from research.

The key issues are what the purpose of the review is and how well its methods are aligned with that purpose. This helps us be clear about how the claims, or 'warrant', of any findings and implications should be understood in relation to the aims and approach adopted.

Meta-analysis of experimental trials aims to answer a particular question about cause and effect in education. It is one part of the picture.

All reviews are ‘reductionist’ to some extent, as they attempt to synthesise information from different contexts for a particular purpose.

The advantages of meta-analysis are that it is both cumulative and comparative. The picture builds over time, so we can understand how consistent effects are. It also provides estimates of impact, which give us an idea of the relative benefit of different areas of research. Other approaches have different strengths, but not these two.
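The cumulative property can be seen in a cumulative meta-analysis, where the pooled estimate is recalculated each time a new study is added. The sketch below assumes fixed-effect inverse-variance pooling (one common method among several) and uses made-up study results; the names `studies` and `cumulative_pooled` are hypothetical. As studies accumulate you can see whether each new one shifts the estimate or confirms it, and the confidence interval narrows. Because each pooled estimate is on a standardised scale, results for different approaches can also be set side by side, which is the comparative property.

```python
from math import sqrt

# Hypothetical studies in publication order: (year, effect size, standard error).
studies = [
    (2001, 0.45, 0.20),
    (2005, 0.30, 0.15),
    (2010, 0.25, 0.10),
    (2016, 0.35, 0.08),
]


def cumulative_pooled(studies):
    """Fixed-effect inverse-variance pooling, recomputed after each study.
    Yields (year, pooled effect, standard error of the pooled effect)."""
    sum_w = 0.0   # running total of weights
    sum_wd = 0.0  # running total of weight * effect
    for year, d, se in studies:
        w = 1.0 / se ** 2  # more precise studies (smaller SE) get more weight
        sum_w += w
        sum_wd += w * d
        yield year, sum_wd / sum_w, sqrt(1.0 / sum_w)


for year, est, se in cumulative_pooled(studies):
    low, high = est - 1.96 * se, est + 1.96 * se  # approximate 95% interval
    print(f"evidence up to {year}: {est:.2f} (95% CI {low:.2f} to {high:.2f})")
```

A random-effects model would widen the intervals when studies disagree; the fixed-effect version is used here only because it is the shortest correct illustration of pooling.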

There are a number of reasons why evidence is important for the profession.

The first is that it is very hard in education to work out what is effective, because pupils are maturing and developing through their experiences all the time.

Understanding progress in learning is like judging how fast people are going on a moving walkway in an airport. It is easier to judge the relative speed of groups of travellers and see who is moving ahead or falling behind. The classroom is similar: it is easier to judge who is making more or less progress within the class than to decide how well the class as a whole is doing.
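The walkway is why trials compare groups rather than measuring raw progress: both groups move on over the year, and an effect size captures only the difference between them relative to the spread of pupils' scores. A minimal sketch, assuming Cohen's d with a pooled standard deviation (one common effect-size formula) and hypothetical end-of-year scores for two classes that are assumed to have started level at 50, as randomisation aims to ensure:

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Standardised mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    pooled_sd = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


# Hypothetical data: both classes are assumed to start at about 50,
# and both move on a lot over the year (the "walkway").
start = 50
intervention = [57, 63, 67, 71, 77]
comparison = [55, 61, 65, 69, 75]

print("average gain, intervention:", mean(intervention) - start)
print("average gain, comparison:  ", mean(comparison) - start)
print("effect size (d):", round(cohens_d(intervention, comparison), 2))
```

Both classes gain 15 or more points, yet the effect size, about 0.26, reflects only the 2-point difference between them relative to the spread of scores. A real trial would usually also adjust for pupils' individual starting points.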

I think evidence is also necessary as a counterbalance to the cognitive biases that we are all susceptible to in understanding what has worked in a situation as complex as education.

Classrooms are hectic places, and even experienced teachers find it challenging to be sure how what they do affects how and what pupils learn.

I think of research here as being like a surveying peg when trying to map the terrain. Every now and again we have to try to work out what has actually happened and whether the causal mechanisms we think are operating are actually there. This acts as a check on, or validation of, our beliefs. A randomised controlled trial is like this surveying peg. Joining up the dots between the pegs is what meta-analysis does, and this provides a map of a particular field of research.

It is unashamedly a scientific approach. I believe that this is sometimes necessary, but never sufficient, to inform professional decision-making. Evidence and professional knowledge and experience are both needed to develop teaching and learning in schools.

The final argument is that evidence in education is not going to go away, even if we don't promote it to the profession. Knowledge is power, as the saying goes.

It is therefore important that the practice community engages with research and evidence as part of its professional knowledge base. Otherwise the power of evidence will sit outside the profession, and evidence will be used as a mechanism to control professional practice.

We should be cautious, certainly. Evidence is not a panacea, but we should also not be naïve.

If we can’t create an effective partnership with the profession for the communication and understanding of research evidence then others may use that evidence in less collaborative or constructive ways.

I have described the current state of knowledge about teaching and learning through meta-analysis as being like creating a historical map of what we currently know and understand, similar to a mappa mundi.

I think we are only at the beginning of the process of systematising knowledge about teaching and learning. It is therefore important to be clear about its current limitations as well as its possibilities.

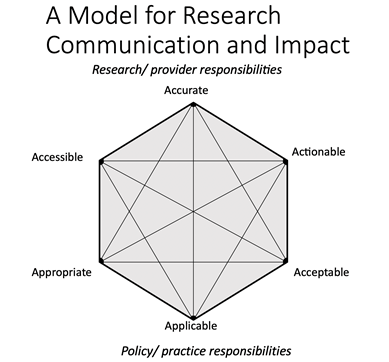

I see research and evidence use as a partnership between researchers and practitioners, each with their own perspectives, expertise and responsibilities (see the diagram below).

When working with teachers and other education professionals, I talk about what's worked (rather than what works), and I use the idea of 'good bets' or 'risky bets' to try to convey uncertainty. There is a clear trade-off here between accuracy and accessibility.

The researcher and research community take primary responsibility for the accuracy of the synthesis, and the practitioner for its applicability to the particular pupils she or he is responsible for.

Other aspects similarly fall more on one side or the other: the researcher should take more responsibility for making research summaries accessible, whereas judging how appropriate evidence is for a particular school or class falls more on the practice side.

These features are in tension with one another. There is a trade-off between accuracy and accessibility, for example, or between research findings being actionable and being appropriate. It is only by working in partnership that the research and practice communities can systematically develop the knowledge and expertise to improve outcomes for learners.

Steve Higgins is Professor of Education at Durham University. His main research interest is the use of research evidence to support effective spending in schools. He is the lead author of the Sutton Trust - Education Endowment Foundation (EEF) Teaching and Learning Toolkit. This is an accessible summary of education research that offers advice for teachers and schools on how to improve the attainment of disadvantaged pupils by considering the cost/benefit of different educational approaches.

His wider research interests lie in understanding how children and young people’s reasoning develops, how digital technologies can be used to improve learning and how teachers can be supported in developing the quality of learning in their classrooms.

Read Steve Higgins’ new book Improving Learning: Meta-analysis of Intervention Research in Education

Read more about meta-analysis on the CEM blog: Introducing the 50% rule by Lee Elliot-Major
