Quality check: are your assessments giving you the best data?

Schools need to be able to trust that the assessment data they have is reliable and robust. But how can you tell? In this blog post we will look at...


Andrew Lyth : Sep 16, 2021 10:31:04 AM


Each year CEM processes the results for hundreds of thousands of students participating in our assessments. The results from those assessments are used in multiple ways:

Given these uses, it is clearly important that the information we provide is accurate, fit for purpose, complete, timely and easily understood. Achieving this requires a range of quality-assurance processes to be in place and draws on expertise from different teams across CEM.

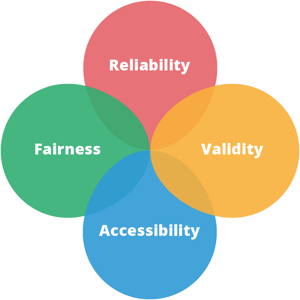

When we develop new assessment questions and new assessments, these must meet several quality control criteria:


There may be circumstances that affect a student’s progress through an assessment to the extent that their results may be unreliable, for example if they feel ill, are uninterested, or do not complete the assessment in the time available. Where possible, we identify these issues and the affected results are automatically flagged.
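The post does not list the flagging rules at this point, so the sketch below is purely illustrative. It assumes two hypothetical checks (too few items attempted, and answers given implausibly fast) with made-up thresholds, simply to show how unreliable results could be flagged automatically.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; not CEM's actual criteria.
MIN_ATTEMPTED_FRACTION = 0.5  # must attempt at least half of the items
MIN_SECONDS_PER_ITEM = 2.0    # faster than this suggests random responding


@dataclass
class SectionResult:
    items_total: int
    items_attempted: int
    seconds_taken: float


def flag_reasons(result: SectionResult) -> list[str]:
    """Return the reasons a section result looks unreliable (empty list if none)."""
    reasons = []
    # Did not get far enough through the section in the time available.
    if result.items_attempted < MIN_ATTEMPTED_FRACTION * result.items_total:
        reasons.append("too few items attempted")
    # Average time per attempted item is implausibly short.
    if (result.items_attempted > 0
            and result.seconds_taken / result.items_attempted < MIN_SECONDS_PER_ITEM):
        reasons.append("responses implausibly fast")
    return reasons


print(flag_reasons(SectionResult(items_total=40, items_attempted=15, seconds_taken=600.0)))
# ['too few items attempted']
```

Returning a list of reasons, rather than a single yes/no flag, keeps it clear why a result was flagged when it is reviewed.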

For example, in the Vocabulary and Mathematics sections of CEM’s MidYIS, Yellis and Alis assessments, students’ results must comply with the following:

To make sure that our standardised scores are meaningful, we have a clearly defined target population that we standardise against. For example, for MidYIS and Yellis we provide nationally standardised scores, with the target population being all students in mainstream secondary schools.
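Standardising against a target population usually means expressing each raw score relative to that population’s mean and standard deviation. A minimal sketch follows. It assumes a mean-100, SD-15 reporting scale (a common convention; the post does not state CEM’s scale) and population parameters estimated from a hypothetical representative sample.

```python
import statistics

# Assumed reporting scale (an assumption, not confirmed by the post).
SCALE_MEAN = 100.0
SCALE_SD = 15.0


def standardise(raw: float, pop_mean: float, pop_sd: float) -> float:
    """Map a raw score onto the reporting scale relative to the target population."""
    z = (raw - pop_mean) / pop_sd  # distance from the population mean in SD units
    return SCALE_MEAN + SCALE_SD * z


# Hypothetical raw scores from a sample intended to represent the target population.
sample = [12, 18, 20, 22, 28]
pop_mean = statistics.mean(sample)
pop_sd = statistics.stdev(sample)  # sample SD used as an estimate of the population SD

print(standardise(20, pop_mean, pop_sd))  # 100.0
```

Because the score depends directly on the estimated mean and standard deviation, a biased sample would shift every student’s standardised score. That is why the representativeness of the estimates matters.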

Additionally, to check that our standardised scores are representative of those student populations, we need accurate estimates of the mean and standard deviation of student performance in those populations. To achieve this, we use the following techniques:

The predictive data we provide are calculated from the results of previous students who took a CEM assessment and later sat external examinations such as GCSE, Scottish Nationals, IB Diploma, or A-Levels.
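One simple way to derive such predictions is to fit a least-squares line to a previous cohort’s baseline scores and later exam results. The sketch below assumes a single linear relationship and uses hypothetical data; CEM’s actual models may differ. Value-added can then be taken as the difference between a student’s actual and predicted result.

```python
from statistics import mean


def fit_line(baseline: list[float], outcome: list[float]) -> tuple[float, float]:
    """Ordinary least squares: return (intercept, slope) for outcome ~ baseline."""
    bx, by = mean(baseline), mean(outcome)
    sxy = sum((x - bx) * (y - by) for x, y in zip(baseline, outcome))
    sxx = sum((x - bx) ** 2 for x in baseline)
    slope = sxy / sxx
    return by - slope * bx, slope


def predict(score: float, intercept: float, slope: float) -> float:
    """Predicted exam outcome for a new student's baseline score."""
    return intercept + slope * score


def value_added(actual: float, predicted: float) -> float:
    """Positive values mean the student did better than predicted."""
    return actual - predicted


# Hypothetical previous cohort: baseline standardised scores and exam points.
prev_baseline = [85, 95, 100, 105, 115]
prev_exam = [3.0, 4.0, 5.0, 5.5, 7.0]

intercept, slope = fit_line(prev_baseline, prev_exam)
expected = predict(110, intercept, slope)
print(round(expected, 2))  # 6.25
```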

To be included in the set of subjects for which we provide predictions and value-added data, a subject’s sample must meet our quality-control criteria for sample size, number of schools, sampling error and correlation.
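A minimal sketch of an inclusion check along these four criteria follows. All thresholds are hypothetical. "Sampling error" is interpreted here, as one plausible choice, as the approximate standard error of the correlation, sqrt((1 - r²) / (n - 2)).

```python
import math
from dataclasses import dataclass
from statistics import mean

# Hypothetical thresholds for illustration; the actual criteria are not given in the post.
MIN_STUDENTS = 100
MIN_SCHOOLS = 10
MAX_SE_OF_R = 0.05
MIN_CORRELATION = 0.4


@dataclass
class Record:
    school_id: str
    baseline: float
    grade: float


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between baseline scores and exam grades."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def subject_qualifies(records: list[Record]) -> bool:
    """Apply sample-size, school-count, correlation and sampling-error checks."""
    n = len(records)
    if n < MIN_STUDENTS or len({r.school_id for r in records}) < MIN_SCHOOLS:
        return False
    r = pearson_r([rec.baseline for rec in records], [rec.grade for rec in records])
    se_r = math.sqrt((1 - r * r) / (n - 2))  # approximate standard error of r
    return r >= MIN_CORRELATION and se_r <= MAX_SE_OF_R


# Hypothetical subject sample: 120 students spread across 12 schools.
records = [Record(f"S{i % 12}", 70 + i, 0.05 * (70 + i) + (0.5 if i % 2 else -0.5))
           for i in range(120)]
print(subject_qualifies(records))       # True
print(subject_qualifies(records[:50]))  # False (too few students)
```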

To ensure that the samples are representative in terms of school sector, we apply weighting factors so that the percentage of students from independent schools matches the national figure of 7%.
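The post does not say how the weighting factors are computed. One standard approach is post-stratification. Each sector’s students receive a weight equal to the target share divided by that sector’s share of the sample, so the weighted share of independent-school students becomes exactly 7%. A sketch with hypothetical data:

```python
TARGET_INDEPENDENT_SHARE = 0.07  # national figure quoted in the post


def sector_weights(is_independent: list[bool],
                   target: float = TARGET_INDEPENDENT_SHARE) -> list[float]:
    """Post-stratification weights so independent-school students make up `target` of the weighted sample."""
    n = len(is_independent)
    sample_share = sum(is_independent) / n
    if not 0 < sample_share < 1:
        raise ValueError("sample must contain students from both sectors")
    w_ind = target / sample_share
    w_other = (1 - target) / (1 - sample_share)
    return [w_ind if ind else w_other for ind in is_independent]


def weighted_mean(values: list[float], weights: list[float]) -> float:
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical sample: 20 of 100 students from independent schools (20%, above the 7% target).
flags = [True] * 20 + [False] * 80
scores = [110.0] * 20 + [100.0] * 80
weights = sector_weights(flags)
print(round(weighted_mean(scores, weights), 2))  # 100.7
```

In this example each independent-school student gets weight 0.35 and every other student 1.1625. The weights still sum to 100, and the weighted mean falls from the unweighted 102 to 100.7 once the over-represented sector is scaled back.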

As well as making sure that students’ results are accurate and meaningful, it is also important that this information is available promptly and can be understood and interpreted correctly. Students’ full results from our assessments are available just a few hours after completion. Each set of results is presented in a variety of tables and charts, accompanied by helpful documentation and support.

Many of these tasks are ongoing because students, and the educational environment around them, change over time. As education changes, the information that school leaders and teachers need changes too.

By having ongoing dialogues with our assessment users, we keep our information up-to-date, relevant and useful.
