How schools can engage with research and evidence



Dr Deborah M. Netolicky : Apr 18, 2018 1:08:00 PM


Teachers, school leaders, schools, and education systems around the world are increasingly expected to use data to inform practice.

The Australian school system in which I work is grounded in the 2008 Melbourne Declaration on Education Goals for Young Australians. Among other things, the Declaration outlines the need for schools to have reliable, rich, good quality data on the performance of their students in order to improve outcomes for all students by: supporting successful diagnosis of student progress; supporting the design of high quality learning programs; and informing school approaches to provision of policies, programs, resourcing, relationships with parents and partnerships with community and business.

It additionally notes that information about the performance of students helps parents and families make informed choices and engage with their children’s education.

In my state of Western Australia, the School Curriculum and Standards Authority states that schools will use data from school-based assessments, as well as prescribed national and state-wide assessments, to inform teacher judgements about student achievement.

So the use of data to inform teaching and learning is embedded in the Australian education context.

As well as using data to inform teaching, learning, and school leadership, educators are increasingly expected to use evidence-based and research-informed education practices.

Evidence-based practice, which has been around in the medical profession since the 1990s, has been appropriated since the 2000s by the education profession as evidence-based education and evidence-based teaching. Educators working in schools, however, face barriers to engaging with research evidence.

Time is an issue, as the core work of teaching or leading in a school leaves little time for considered immersion in research literature. Cost is another impediment. Most academic journal articles are behind a paywall and many academic books have a hefty price tag.

In response to educators being time-poor and research being hard to access, evidence-based education has become a commodity. Courses, books, and how-to guides are being sold to educators worldwide.

When education evidence becomes marketised (“buy my book”; “pay to come to this professional development”; “hire our consultants”), we need to ask whose interests are being served: those of students, or those of people with a vested interest in selling solutions to make money?

Gary Jones recently noted on the Cambridge Insight (formerly CEM) blog that evidence-based education is “not just about EEF sponsored randomised trials but requires individual teachers to reflect on how to improve their practice in their own particular school or college”. Yet because evidence-based practice has its basis in medical science, proponents of evidence-based education often promote quantitative and experimental research methods.

These methods have their own limitations and are not the most appropriate vehicle to answer every research question.

John Hattie and Robert Marzano point to meta-analyses to rank teaching methods and give them an effect size. The UK has the Education Endowment Foundation (EEF), the United States has the What Works Clearinghouse, and Australia has Evidence for Learning (E4L), which provides an Australasian take on the EEF toolkit.

These, too, rely on meta-analysis, but meta-analysis has been widely criticised as a tool for deciding what works best in education.

For example, Snook et al. (2009) argue that when averages are sought or large numbers of disparate studies amalgamated, the complexity of education and of classrooms can be overlooked. They also point out that any meta-analysis that does not exclude poor or inadequate studies is misleading or potentially damaging.

Terhart (2011) points out that focusing on quantifiable measures of student performance means that broader goals of education can be ignored.

Simpson (2017) notes that meta-analyses often do not compare studies with the same comparisons, measures and range of participants, arguing that aggregated effect sizes are more likely to show differences in research design manipulation than in effects on learners.
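One way to see how design choices can move an effect size is a small worked example. The sketch below assumes the common standardised form (difference in group means divided by a standard deviation); the scores and spreads are hypothetical numbers chosen for illustration, not data from any study cited here.

```python
def standardised_effect_size(mean_treatment, mean_control, spread):
    """Difference in group means divided by the standard deviation of scores."""
    return (mean_treatment - mean_control) / spread


# Hypothetical: the same 5-point raw gain on a test in two study designs.
mean_control = 50.0
mean_treatment = 55.0

# Design A samples a broad range of students, so scores are widely spread.
# Design B samples a narrow range of students, so scores are tightly clustered.
broad_sample_sd = 10.0   # assumed value, for illustration only
narrow_sample_sd = 5.0   # assumed value, for illustration only

d_broad = standardised_effect_size(mean_treatment, mean_control, broad_sample_sd)
d_narrow = standardised_effect_size(mean_treatment, mean_control, narrow_sample_sd)

print(f"Broad sample:  effect size = {d_broad}")
print(f"Narrow sample: effect size = {d_narrow}")
```

In this sketch the learners gain exactly the same number of points in both designs, yet the narrower sample reports an effect size twice as large. Averaging such figures across studies would mix differences in design with differences in learning.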

Wiliam (2016) states that “in education, meta-analysis is simply incapable of yielding meaningful findings that leaders can use to direct the activities of the teachers they lead” (p.96). Wiliam (2018) also points out that, in education, “what works is generally the wrong question, because most ideas that people have had about how to improve education work in some contexts but not others…everything works somewhere and nothing works everywhere” (p.2).

For full disclosure, my own research is qualitative in nature and seeks to describe and understand the human aspects of education, rather than to identify large patterns or averages.

A multiplicity of research approaches provides diverse ways of understanding education, but we need to interrogate the approaches and arrive at conclusions with caution. Teachers’ wisdom of practice and immersion in their own contexts need to be honoured.

Context and praxis matter in education.

Researcher, school leader, and teacher Dr Deborah M. Netolicky has almost 20 years’ experience in teaching and school leadership in Australia and the UK. She is Honorary Research Associate at Murdoch University and Dean of Research and Pedagogy at Wesley College in Perth, Australia. Deborah blogs at theeduflaneuse.com, tweets as @debsnet, and is a co-Editor of Flip the System Australia.

Listen to Prof Rob Coe’s presentation ‘Translating Evidence into Improvement: why is it so hard?’

It makes sense that the most effective teaching methods are used in classrooms, and that the most effective leadership and governance practices are...

In education, translating evidence into practice is a process which involves everyone, from classroom-based teachers, to school leadership teams,...

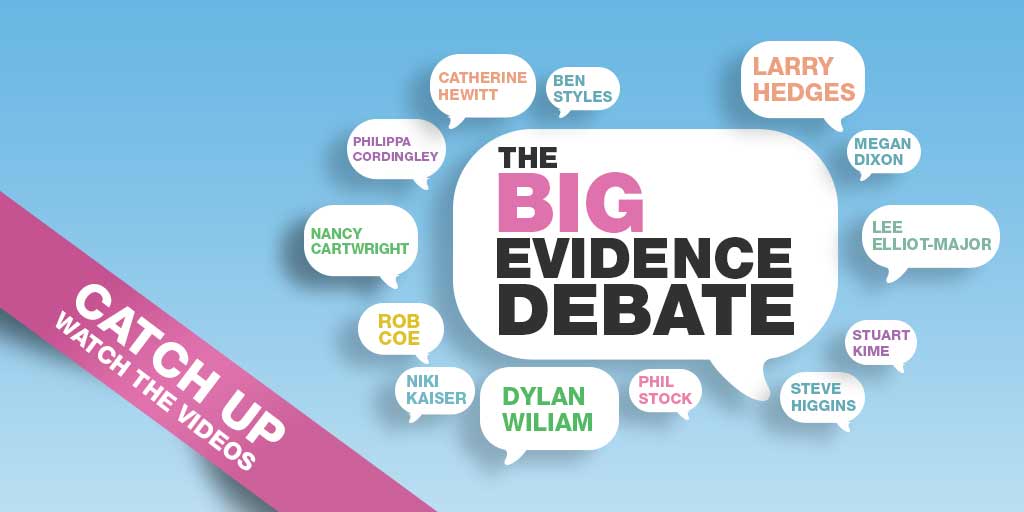

On Tuesday 4th June 2019 we held the first ever Big Evidence Debate. Leading educational experts Dylan Wiliam and Larry Hedges gave fascinating...